What are AI multi-agents for evaluations?

AI multi-agents are a system of AI agents that collaborate to evaluate LLM outputs, with the goal of matching human evaluations as closely as possible.

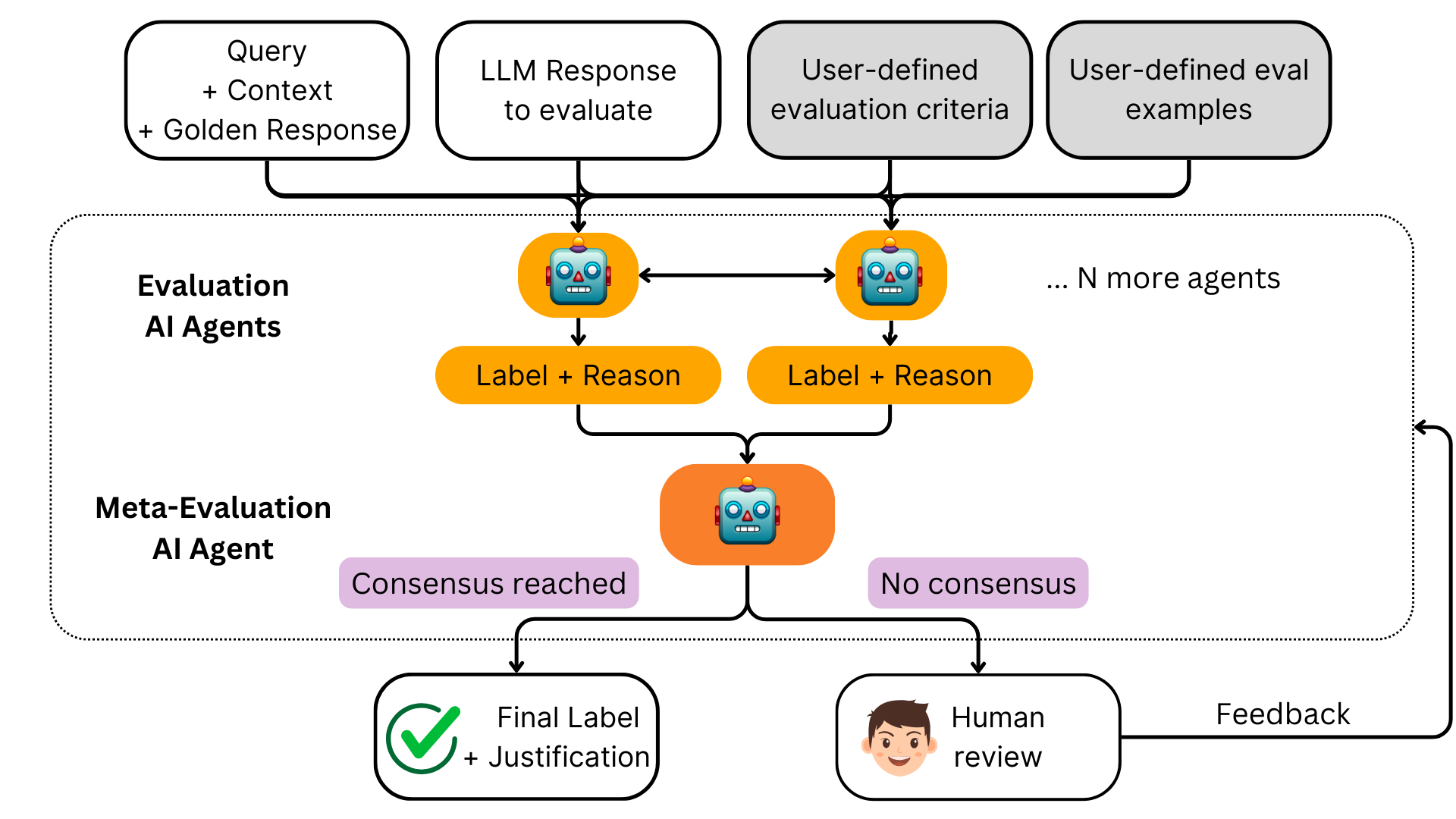

A hierarchical multi-agent architecture consists of multiple evaluation AI agents and one meta evaluation AI agent:

- The AI evaluation agents discuss and debate over multiple rounds to individually give a label and a reason for each data point.

- The meta evaluation AI agent makes the final decision on the label, or decides to defer to a human instead. The meta evaluator also evaluates the quality of the evaluation agents.

How do agents learn to mimic human evaluations?

AI agents are first trained/seeded with human-curated labeled examples, which act as training data to inform the agents' evaluations. The agents then learn from human feedback to fix the errors they make, so their evaluations improve over time. This feedback loop is arguably the most powerful aspect of the AI multi-agent system.
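The seeding and feedback loop described above can be sketched as follows. This is a minimal illustration, not the system's actual mechanism: it assumes seeding is done in-context (few-shot examples placed in the prompt) rather than by fine-tuning, and `LabeledExample`, `ExampleStore`, `add_feedback`, and `build_prompt` are hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LabeledExample:
    text: str
    label: str
    reason: str


@dataclass
class ExampleStore:
    """Hypothetical store of human-labeled examples shown to an agent."""

    examples: List[LabeledExample] = field(default_factory=list)

    def add_feedback(self, text: str, agent_label: str,
                     human_label: str, reason: str) -> bool:
        """Record a human correction. Returns True if the agent was wrong."""
        if agent_label == human_label:
            return False  # nothing to learn from an agreement
        self.examples.append(LabeledExample(text, human_label, reason))
        return True

    def build_prompt(self, item: str) -> str:
        """Render stored examples as few-shot context ahead of the new item."""
        shots = "\n".join(
            f"Text: {ex.text}\nLabel: {ex.label}\nReason: {ex.reason}\n"
            for ex in self.examples
        )
        return f"{shots}\nText: {item}\nLabel:"


# Seed with one human-curated example (illustrative data only).
store = ExampleStore(
    [LabeledExample("The sky is green.", "incorrect", "The sky is blue.")]
)
# A human corrects an agent mistake; the correction becomes a new example.
store.add_feedback("2+2=5", "correct", "incorrect", "2+2 equals 4.")
prompt = store.build_prompt("Water is wet.")
```

Under this assumption, every correction a human makes is visible to the agent on its next evaluation, which is one simple way feedback can accumulate over time.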

Why use agents, instead of LLMs, for evaluations?

Agents are preferred over LLMs for evaluations because:

- AI multi-agents learn to mimic human evaluators.

- Multi-agent evaluations are more accurate than using a single LLM as a judge.

- Agents debate over multiple rounds, so they can correct errors made in earlier rounds.

- Agents can ask a human to make the final decision when they are not certain enough to provide a label, which avoids errors caused by uncertain guesses.

- The accuracy of the agent evaluators improves over time with human feedback.

Note that the most basic version of a multi-agent system, with a single judge agent, no meta evaluation agent, and one discussion round, reduces to an LLM-as-a-judge. Multi-agent systems are therefore a superset of LLM-as-a-judge.

What are evaluation AI agents?

Each AI evaluation agent is responsible for providing a label and a reason for each data point being evaluated.

First, each agent independently provides a label and reason.

Next, the agents discuss and debate with one another over multiple rounds, and each agent again gives its own label and reason for each data point. Agents often change their labels as a result of the debate, and this leads to improved performance.
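The two steps above (an independent first pass, then debate rounds) can be sketched as a simple loop. This is an illustrative sketch under stated assumptions: an agent is modeled as any callable that receives the item plus its peers' previous verdicts, and `Verdict`, `run_debate`, `stubborn`, and `follower` are hypothetical names. A real agent would wrap an LLM call; the stub agents here exist only to make the example runnable.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Verdict:
    label: str
    reason: str


# An agent maps (item, peers' verdicts from the previous round) to a verdict.
Agent = Callable[[str, List[Verdict]], Verdict]


def run_debate(item: str, agents: List[Agent], rounds: int = 3) -> List[Verdict]:
    """Run `rounds` total rounds; the first round is always independent."""
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    # Round 1: each agent labels on its own, with no peer verdicts visible.
    verdicts = [agent(item, []) for agent in agents]
    for _ in range(rounds - 1):
        # Later rounds: each agent sees the others' latest verdicts and may revise.
        verdicts = [
            agent(item, verdicts[:i] + verdicts[i + 1:])
            for i, agent in enumerate(agents)
        ]
    return verdicts


def stubborn(label: str) -> Agent:
    """Stub agent that never changes its label."""
    def agent(item: str, peers: List[Verdict]) -> Verdict:
        return Verdict(label, "fixed opinion")
    return agent


def follower(initial: str) -> Agent:
    """Stub agent that adopts the most common peer label once it sees one."""
    def agent(item: str, peers: List[Verdict]) -> Verdict:
        if not peers:
            return Verdict(initial, "first impression")
        majority = Counter(p.label for p in peers).most_common(1)[0][0]
        return Verdict(majority, "persuaded by peers")
    return agent


panel = [stubborn("pass"), stubborn("pass"), follower("fail")]
after_one_round = [v.label for v in run_debate("some output", panel, rounds=1)]
after_two_rounds = [v.label for v in run_debate("some output", panel, rounds=2)]
```

In this sketch, calling `run_debate` with a single agent and `rounds=1` makes exactly one judgment with no peer input, which corresponds to the plain LLM-as-a-judge setup.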

What is a meta-evaluation AI agent?

The meta-evaluation AI agent does three things:

- Makes the final decision on the label, or decides to defer to a human instead.

- Evaluates the quality of the individual evaluation AI agents.

- Learns from human feedback.
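The three responsibilities above can be sketched in a small class. The source does not say how the meta-evaluator decides when to defer, so the agreement threshold below is an assumption chosen for illustration, and "learning" is reduced here to tracking each agent's accuracy against human labels. `MetaEvaluator`, `decide`, `record_human_label`, `agent_accuracy`, and `min_agreement` are all hypothetical names.

```python
from collections import Counter
from typing import Dict, Optional


class MetaEvaluator:
    """Illustrative meta-evaluator: vote, defer when unsure, score agents."""

    def __init__(self, min_agreement: float = 0.75) -> None:
        # Assumed rule: defer to a human when agreement falls below this share.
        self.min_agreement = min_agreement
        self.correct: Dict[str, int] = {}
        self.total: Dict[str, int] = {}

    def decide(self, labels: Dict[str, str]) -> Optional[str]:
        """Return the final label, or None to ask a human instead."""
        if not labels:
            raise ValueError("need at least one agent label")
        top_label, votes = Counter(labels.values()).most_common(1)[0]
        if votes / len(labels) >= self.min_agreement:
            return top_label
        return None

    def record_human_label(self, labels: Dict[str, str], human_label: str) -> None:
        """Use a human label as feedback to update each agent's track record."""
        for name, label in labels.items():
            self.total[name] = self.total.get(name, 0) + 1
            self.correct[name] = self.correct.get(name, 0) + int(label == human_label)

    def agent_accuracy(self, name: str) -> Optional[float]:
        """Share of human-reviewed items the agent labeled correctly."""
        seen = self.total.get(name, 0)
        if seen == 0:
            return None  # no human feedback yet for this agent
        return self.correct.get(name, 0) / seen


meta = MetaEvaluator(min_agreement=0.75)
confident = meta.decide({"a": "pass", "b": "pass", "c": "pass", "d": "fail"})
split_votes = {"a": "pass", "b": "fail"}
uncertain = meta.decide(split_votes)  # None: escalate to a human
meta.record_human_label(split_votes, human_label="fail")
```

A natural extension, not shown here, would be to weight each agent's vote by its tracked accuracy, so that feedback also changes future decisions.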